💌 DATENeRF: Depth-Aware Text-based Editing of NeRFs 💌

Example edit prompts: 'a starry night canvas', 'a metallic vase', 'a b&w checkered pattern table', 'a teddy bear with a rainbow', 'a Corgi'.

Abstract

Recent diffusion models have demonstrated impressive capabilities for text-based 2D image editing. Applying similar ideas to edit a NeRF scene remains challenging as editing 2D frames individually does not produce multiview-consistent results.

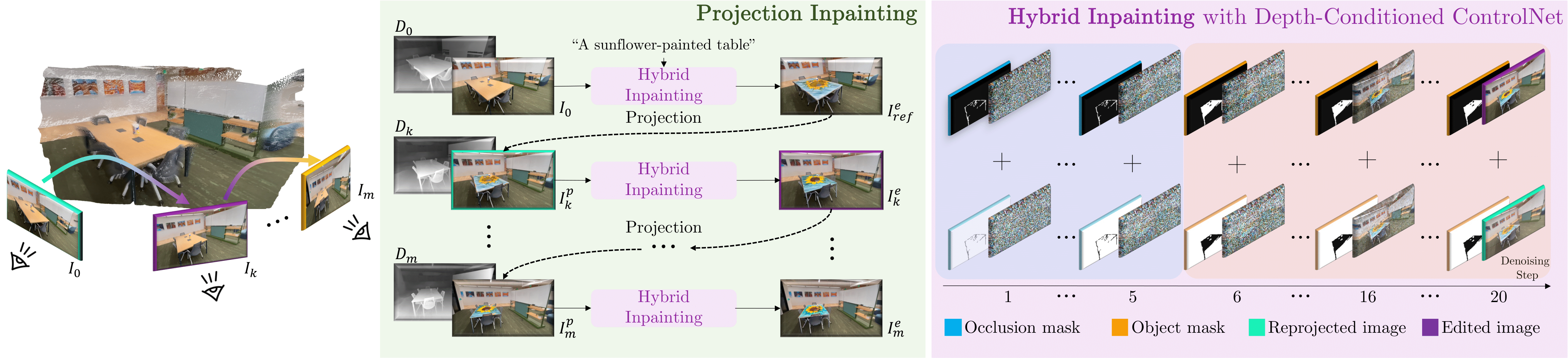

We make the key observation that the geometry of a NeRF scene provides a way to unify these 2D edits. We leverage this geometry in a depth-conditioned ControlNet to improve the consistency of individual 2D image edits. Furthermore, we propose an inpainting scheme that uses the NeRF scene depth to propagate 2D edits across images while staying robust to errors and resampling issues.

We demonstrate that this leads to more consistent, realistic and detailed editing results compared to previous state-of-the-art text-based NeRF editing methods.
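To make the depth-based propagation idea concrete, here is a minimal NumPy sketch of how an edited view could be reprojected into another view using scene depth. It is an illustration under stated assumptions, not the authors' implementation: the function names `reproject` and `propagate_edit`, the relative depth tolerance `tol`, the shared pinhole intrinsics `K`, the OpenCV-style camera convention (z forward), and nearest-pixel splatting are all choices made for this example. Pixels that fail the depth-agreement check or fall outside the target image are left unfilled; in a pipeline like the one described above, such holes would be handed to an inpainting model, which is not shown here.

```python
import numpy as np

def reproject(depth_src, K, c2w_src, c2w_tgt):
    """Map every source pixel into the target view using source z-depth.

    Assumes pinhole intrinsics K shared by both views and 4x4
    camera-to-world poses (hypothetical conventions for this sketch).
    Returns target pixel coordinates (H, W, 2) and target-camera depth (H, W).
    """
    H, W = depth_src.shape
    u, v = np.meshgrid(np.arange(W), np.arange(H))  # u: column, v: row
    pix = np.stack([u, v, np.ones_like(u)], axis=-1).astype(np.float64)
    # Back-project: X_src = depth * K^-1 [u, v, 1]^T
    pts_src = (pix @ np.linalg.inv(K).T) * depth_src[..., None]
    # Source camera -> world -> target camera
    pts_h = np.concatenate([pts_src, np.ones((H, W, 1))], axis=-1)
    T = np.linalg.inv(c2w_tgt) @ c2w_src
    pts_tgt = (pts_h @ T.T)[..., :3]
    z = pts_tgt[..., 2]
    proj = pts_tgt @ K.T
    with np.errstate(divide="ignore", invalid="ignore"):
        uv = proj[..., :2] / proj[..., 2:3]
    return uv, z

def propagate_edit(edit_src, depth_src, depth_tgt, K, c2w_src, c2w_tgt, tol=0.05):
    """Splat an edited source image into the target view.

    Returns (image, filled): the partially filled target image and a boolean
    mask of pixels that received a value. ~filled marks holes to inpaint.
    """
    H, W = depth_tgt.shape
    uv, z = reproject(depth_src, K, c2w_src, c2w_tgt)
    ui, vi = np.rint(uv[..., 0]), np.rint(uv[..., 1])
    valid = np.isfinite(ui) & np.isfinite(vi) & (z > 0)
    valid &= (ui >= 0) & (ui < W) & (vi >= 0) & (vi < H)
    ui = np.where(valid, ui, 0).astype(int)
    vi = np.where(valid, vi, 0).astype(int)
    # Reject pixels whose reprojected depth disagrees with the target depth
    # (occlusions or depth errors), using a relative tolerance.
    d_tgt = depth_tgt[vi, ui]
    valid &= np.abs(d_tgt - z) <= tol * d_tgt
    out = np.zeros((H, W) + edit_src.shape[2:], dtype=edit_src.dtype)
    filled = np.zeros((H, W), dtype=bool)
    out[vi[valid], ui[valid]] = edit_src[valid]
    filled[vi[valid], ui[valid]] = True
    return out, filled
```

Nearest-pixel splatting is the simplest option and can leave small gaps or overwrite pixels when several source pixels land on the same target pixel; a more careful version would resolve such collisions by depth.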

Overview

Convergence Speed

Prompt: 'a panda'. Methods compared: Instruct-NeRF2NeRF + masks, Ours Without Projection, Ours.

Edge-Conditioned

Prompts: 'A Fauvism painting', 'An Edvard Munch painting', 'Vincent Van Gogh'.

3D Object Compositing

Prompts: 'a brown cowboy hat', 'a plaid cowboy hat', 'a metallic cowboy hat'.

Scene Editing

Prompt: 'Van Gogh-Style garden'.